Mole or cancer? The algorithm that gets one in three melanomas wrong and erases patients with dark skin

The Basque Country is implementing Quantus Skin in its health clinics after an investment of 1.6 million euros. Specialists criticise the artificial intelligence developed by the Asisa subsidiary due to its “poor” and “dangerous” results. The algorithm has been trained only with data from white patients.

Time is money. Especially for melanoma, the most dangerous skin cancer: diagnosing this tumour as soon as possible is more decisive in saving lives than in any other cancer. In Spain, it is estimated that in 2025 there will be around 9,400 cases of melanoma, a very aggressive cancer which can spread rapidly and metastasise in just a few months. When this happens, the prognosis is usually poor, so detection errors can be fatal.

It is precisely this urgency that has led the Basque Country to commit to artificial intelligence. In 2025, the Basque health system, Osakidetza, is working on incorporating Quantus Skin, an algorithm designed to diagnose the risk of skin cancer, including melanoma, at its primary health clinics and hospitals. In theory, it promises to speed up the process: general practitioners will be able to send images of suspicious lesions to the hospital’s dermatology service, along with the algorithm’s estimate of their being malignant. The Basque government’s idea is that Quantus Skin, which is currently being tested, will help prioritise patients for treatment.

A 1.6 million euro public contract for Asisa

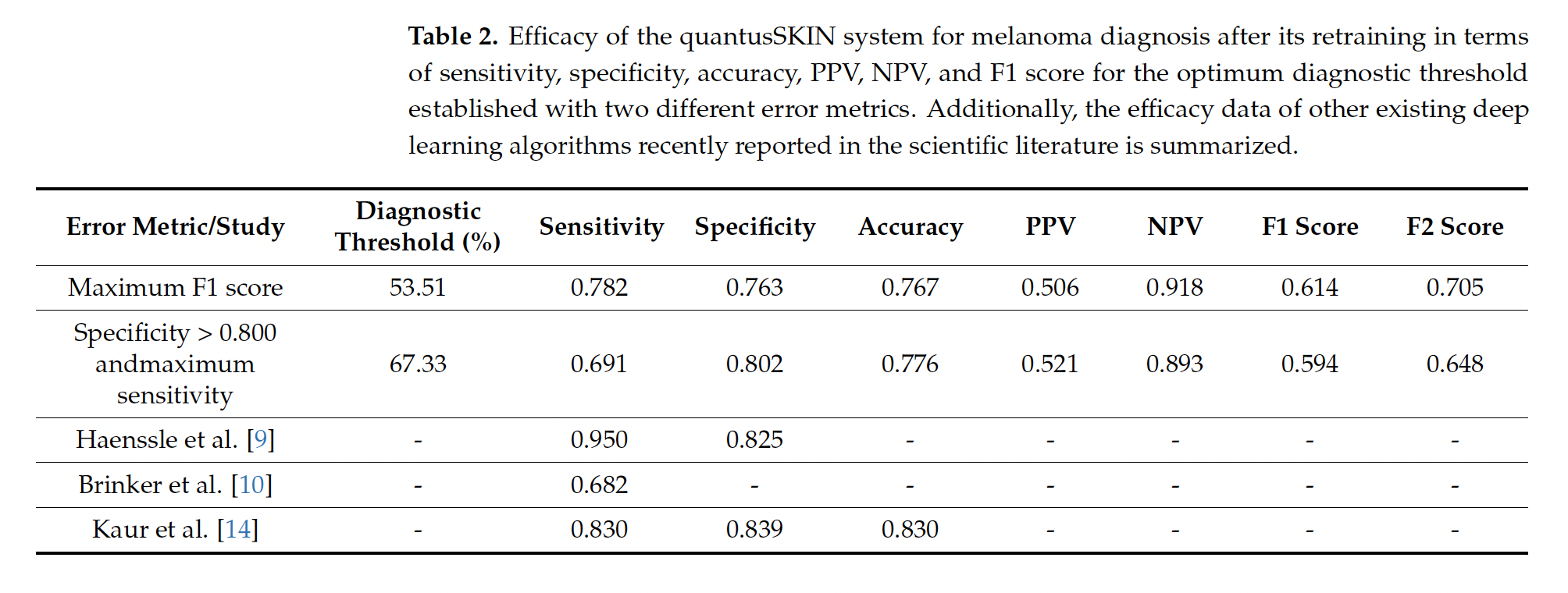

In 2022, the Basque Health Service, Osakidetza, awarded a €1.6 million contract to Transmural Biotech to implement “artificial intelligence algorithms in medical imaging,” which required achieving a sensitivity and specificity of “at least 85%.” The company, which was created as a spin-off from the University of Barcelona and Hospital Clínic, belongs to the private insurance company Asisa. Although the specifications included several types of cancer and other diseases, Osakidetza only selected two algorithms, including Quantus Skin, due to its “greater healthcare impact” and to “obtain a higher return on health.” The decision, moreover, was taken unilaterally, without consulting specialists, Civio has learned. In February, Osakidetza stated that Quantus Skin had passed the “validation phase” and was in the “integration phase.” In a more recent response to queries from Civio, the service now says that it continues testing the algorithm and will take decisions “accounting for the results we obtain.” However, the service did not address the fact that the published clinical results of Quantus Skin (69.1% sensitivity and 80.2% specificity) are below the 85% threshold set by the public contract. Apart from the award in the Basque Country, Transmural Biotech has only one other public contract, in Catalonia, for a much smaller amount (25,000 euros) to certify artificial intelligence algorithms in radiology.

However, the data are troubling. Transmural Biotech, the company that markets Quantus Skin, conducted an initial study with promising results, but it had significant limitations: it was conducted entirely online and was not published in any academic journal, meaning that it did not undergo the usual quality control required in science.

Later, dermatologists from Ramón y Cajal Hospital and professors from Complutense University in Madrid conducted a second study, which was published, to evaluate the actual clinical efficacy of Quantus Skin. This study, which received funding and technical assistance from Transmural Biotech, showed worse results: the algorithm misses one in three melanomas. Its sensitivity is 69.1%, which means that it misses 31% of actual cases of this potentially lethal cancer.

When Civio contacted the CEO of Transmural Biotech, David Fernández Rodríguez, he responded evasively by email: “I don’t know what it is right now.” After Civio insisted by phone, he changed his story: “What we were doing was testing,” to detect possible implementation problems. And, at the end of the call, Fernández Rodríguez acknowledged that Quantus Skin “didn’t stop working, it just worked much worse, but we had to figure out why.”

Fernández Rodríguez attributes these poorer results to deficiencies in image capture due to not following Quantus Skin’s instructions. This is something they have also seen in trials in the Basque Country: “Primary care doctors are not well trained to take the images,” he says, which implies a need to “train the doctors.” However, the second study involved dermatologists who specialise precisely in photographing suspicious lesions for subsequent diagnosis. According to Fernández Rodríguez, reliability improved after “cropping the images properly” because “they were not following the instructions exactly.”

Independent sources criticise the diagnostic tool

“For skin cancer, having sensitivities of 70% is very bad. It’s very poor. If you give this to someone to take a photo and tell you if it could be a melanoma and they are wrong in one out of three, it is inappropriate for skin cancer screening in a primary setting. You have to demand more,” explains Dr Josep Malvehy Guilera, director of the Skin Cancer Unit at the Hospital Clínic in Barcelona. “A 31% false negative rate sounds dangerous to say the least,” says Dr Rosa Taberner Ferrer, dermatologist at the Son Llàtzer Hospital in Mallorca and author of Dermapixel: “As a screening test it’s crap.”

However, Fernández Rodríguez attempts to downplay the problem by focusing only on data that favours his product, avoiding mention of Quantus Skin’s low sensitivity. Quantus Skin fails in two ways: according to the same study, its specificity implies a 19.8% false positive rate, i.e. it mistakes one in five benign moles for melanoma. This could lead to an unnecessary referral of around 20% of screened patients.

In the study, the authors - dermatologists at the Ramón y Cajal Hospital in Madrid and optics professors at the Complutense University of Madrid - argue that it is preferable for Quantus Skin to have a higher specificity (fewer false positives) even at the cost of a lower sensitivity (more false negatives) because it is not used for definitive diagnoses. It is just a screening tool, to refer cases from primary care. They hypothesise that this can prevent overcrowding in specialist consultations, reducing waiting times and lowering medical costs.

Specialists consulted by Civio disagree. Although there is no ideal standard for cancer diagnosis - partly because it depends on the aggressiveness of each tumour - what Quantus Skin has achieved is far from acceptable. “If it misdiagnoses melanoma in lesions with a potential risk of growing rapidly and potentially even causing the patient’s death, then I must be very intolerant. I expect sensitivities of at least 92%, 93%, 94%,” says dermatologist Malvehy Guilera of the Hospital Clínic in Barcelona.

“If they intend to use it for screening, then the system should have a super high sensitivity at the expense of a slightly lower specificity,” explains Taberner Ferrer. In other words, it is preferable for an algorithm like this to be overly cautious: better to err a little by generating false alarms in healthy people than to miss a real case of cancer.

Dark skin, uncertain diagnosis

The problems with Quantus Skin go beyond its low sensitivity. The paper only evaluated its efficacy in diagnosing melanoma, but did not look at other more common but less aggressive skin cancers, such as basal cell carcinoma and squamous cell carcinoma, where Quantus Skin can also be applied. The authors also did not study how skin colour affects the performance of the algorithm, although they acknowledge that this is one of the main limitations of their research.

The diversity that Quantus Skin neglects

At the beginning of 2025, the Basque Country had 316,942 people of foreign origin, according to data from the Ikuspegi Basque Immigration Observatory. More than 60,000 came from the Maghreb and sub-Saharan Africa, while nearly 164,000 people came from Latin America, where there is a great variability of skin tones. That is not counting people born in Spain with foreign ancestry who reside in the Basque Country, such as the well-known footballers Iñaki and Nico Williams.

Quantus Skin, based on neural networks, has learned to recognise skin cancer almost exclusively in white people. The algorithm was first fed with just over 56,000 images from the International Skin Imaging Collaboration (ISIC), a public repository of medical photographs collected mainly by Western hospitals, where the majority of patients have fair skin. Subsequently, Quantus Skin was re-trained using images of 513 patients from the Ramón y Cajal Hospital in Madrid, all of whom were white.

The data set used to train Quantus Skin includes images “of Caucasian males and females,” Fernández Rodríguez says. “I don’t want to get into the issue of ethnic minorities and all that, because the tool is used by the Basque Country, by Osakidetza. What I am making available is a tool, with its limitations,” Fernández Rodríguez says. Despite the lack of training on darker skins, the Basque government says it is not necessary to “implement” any measure “to promote equality and non-discrimination,” as stated in the Quantus Skin file in the Basque Country’s catalogue of algorithms and artificial intelligence systems. However, as the neural networks have been trained almost exclusively on images of white people, they are likely to fail even more on darker skins, such as individuals of Roma ethnicity or migrants from Latin America and Africa.

“Algorithms are so easily fooled,” says Adewole Adamson, professor of dermatology at the University of Texas, who warned in 2018 of the discrimination that artificial intelligence could lead to if it was not developed in an inclusive and diverse way.

His predictions have been confirmed. In dermatology, when algorithms are fed mainly with images of white patients, “diagnostic reliability in dark skin decreases,” says Taberner Ferrer. The precision of the Skin Image Search algorithm, from the Swedish company First Derm, trained mainly on photos of white skin, dropped from 70% to 17% when tested on people with dark skin. More recent research has confirmed that such algorithms perform worse on black people, which is not due to technical problems, but to a lack of diversity in the training data.

Although melanoma is a much more common cancer in white people, people with dark skin have a significantly lower overall survival rate. American engineer Avery Smith knows these figures well. His wife, Latoya Smith, was diagnosed with melanoma only a year and a half after getting married. “I was so surprised by the survival rates listed for people with the same diagnosis as my wife, and by how they were dependent on race. My wife and I are both Black American and we were at the bottom of the survival rate. I didn’t know until it hit me like a bus. That’s scary as hell,” he tells Civio. Some time after the diagnosis, in late 2011, Latoya died.

Since then, Smith has been working to make dermatology more inclusive and to ensure that algorithms do not amplify inequalities. To remind us of the impact they can have, especially on vulnerable groups, Smith rejects talking about artificial intelligence as a “tool,” as if it were a simple pair of scissors: “It’s a marketing term. It’s a way to get people to grasp it who aren’t technologists. But it’s far more than just a tool.”

Legal expert Anabel K. Arias, spokesperson for the Federation of Consumers and Users (CECU), also speaks of these effects: “When thinking about using it to make an early diagnosis, there may be a portion of the population that is under-represented, and in that case, it may be wrong and have an impact on the health of the person. You can even call it harm.”

Invisible patients in the eyes of an algorithm

“People tend to put too much trust in artificial intelligence, we attribute to it qualities of objectivity that are not real,” says Helena Matute Greño, professor of experimental psychology at the University of Deusto. Any AI uses the information it receives to make decisions. If that input data is bad or is incomplete, it is likely to fail. When it is systematically wrong, the algorithm makes mistakes that we call biases. If they affect a certain group of people more than others - because of their origin, skin colour, gender or age - we call them discriminatory biases.

A review published in the Journal of Clinical Epidemiology showed that only 12% of studies on AI in medicine looked for bias. And when they did, the most frequent bias was racial bias, followed by gender and age, with the vast majority affecting groups that had historically suffered discrimination. These errors can occur if the training data are not sufficiently diverse and balanced: if algorithms learn from only part of the population, they perform worse on different or minority groups.

Errors are not limited to skin colour. Commercial facial recognition technologies fail more often at classifying black women because they have historically been trained on images of white men. Similarly, algorithms that analyse chest X-rays or predict cardiovascular disease perform worse in women if the training data is unbalanced. Meanwhile, one of the most widely used datasets for predicting liver disease is so biased - 75% of the training set is male - that algorithms using it fail much worse in women. In the UK, the algorithm for prioritising organ transplants discriminated against younger people. The reason? It had been trained with limited data, which only took into account survival over the next five years, and not the potentially much longer life that patients receiving a new organ might gain.

“The data used for training must represent the entire population in which it will be used,” explains Nuria Ribelles Entrena, spokesperson for the Spanish Society of Medical Oncology (SEOM) and oncologist at the Virgen de la Victoria University Hospital in Malaga: “If I only train it with a certain group of patients, it will be very effective in that group, but not in others.”

Age bias is especially problematic in paediatrics. “Children are not little adults. They have completely different physiology and pathological processes,” warn the authors of a journal article. Since children do not normally participate in clinical research, the situation is “a drama,” according to Antonio López Rueda, spokesperson for the Spanish Society of Medical Radiology (SERAM) and radiologist at Bellvitge University Hospital in Barcelona.

Ignasi Barber Martínez de la Torre, spokesperson for the Spanish Society of Paediatric Radiology (SERPE) and head of paediatric radiology at Sant Joan de Déu Hospital, illustrates this with a personal experience. His team tried to validate a chest X-ray model trained on adults in the paediatric population. “We soon realised that it made many more errors. The sensitivity and specificity were totally different,” he says. One of the errors was identifying the thymus, a very large gland in young children that disappears in adulthood, as a suspect. The same goes for the skeleton, which in young children has “unossified parts” that can be mistaken for fractures.

Navigating the bias obstacle course

A solution to bias exists: “The training set has to be as large as possible,” explains López Rueda. But the data are not always available for independent analysis. So far, most artificial intelligence systems implemented in Spain that use medical images do not publish their training data. This is the case with two dermatology systems - whose names are not even public - that will first be tested in the Caudal health area and then extended to the whole Principality of Asturias. The same applies to the commercial application ClinicGram, for detecting diabetic foot ulcers, in use at the University Hospital of Vic near Barcelona, and to several private radiology systems, such as Gleamer BoneView and ChestView and Lunit, which are operating in the Community of Madrid, the Principality of Asturias and the Valencian Community.

Where training datasets are accessible, another obstacle is that they do not collect metadata, such as origin, gender, age or skin type, which would allow us to check whether the datasets are inclusive and balanced. In dermatology, most public datasets do not usually tag the origin of patients or their skin tone. When this information is included, studies consistently show that the black population is severely underrepresented. “There has been a growing awareness of the problem and developers of algorithms have tried to address these shortcomings. However, there is still work to be done to create representative training data for algorithms,” Adamson says.

The quality and quantity of available data also determines how well the algorithms work. “What made us improve our diagnostic efficiency was that we used our own imaging resources,” says Julián Conejo-Mir, professor and head of dermatology at the Virgen del Rocío University Hospital in Seville. Conejo-Mir and colleagues developed an artificial intelligence algorithm for skin cancer diagnosis and to identify the depth of melanoma, a parameter that is related to the aggressiveness of these tumours.

Their database, which includes images of nearly a thousand patients from the hospital in Seville and photographs from other repositories, has been used to design an algorithm, currently under research, with a 90% accuracy rate. But even in apparently successful systems like this one, it is difficult to train algorithms to recognise less frequent cases. This is precisely what happens with acral lentiginous melanoma, the most common skin cancer in the black population and the one Bob Marley died of when he was only 36 years old. This tumour is particularly treacherous because it appears in areas where people rarely look for suspicious lesions, such as the palms of the hands, the soles of the feet or under the nails, as happened to Marley.

Every year, the dermatology service at the Virgen del Rocío University Hospital in Seville diagnoses around 150 cases of melanoma, of which only 2 or 3 are acral lesions. “We had to take it out of training, because we had very few cases and, if we joined it to the rest, it failed; if we separated it, we didn’t have a sufficient number of images,” says José Juan Pereyra Rodríguez, head of section at the Virgen del Rocío University Hospital in Seville.

This artificial intelligence, which is not used for clinical screening but for research purposes, cannot be applied to cases of acral lentiginous melanoma because the team did not have enough data on this type of cancer to train the algorithm reliably. To achieve this, they would have needed about 50 years’ worth of locally available data, Pereyra Rodríguez estimates. “In our case, it’s as simple as saying: ‘Don’t use the algorithm for acral lesions, in general, because I haven’t trained it for that.’ That’s it; it’s a limitation,” he says.

“The theory says that if 90% of my population” corresponds to white skins, “I must train” with those types “because prevalence is also important when it comes to making decisions. I must train in my environment,” Pereyra Rodríguez says. In the case of systems developed abroad, hospitals should ideally evaluate the performance of the algorithms on their own patient groups. López Rueda also calls for “re-training with local data” before implementing any artificial intelligence: “It’s very expensive for both the company and the hospital, but that’s what would really work.”

Even within Spain, the characteristics of the population vary depending on the postcode. “If I develop software in the Hospital Clínic [in the centre of Barcelona] and implement it in Bellvitge [in the suburbs], it won’t work for me. If I do it the other way around, it won’t work either,” López Rueda says. The consequences of algorithmic biases can be truly disastrous: patients can be harmed by an incorrect diagnosis. “Biased algorithms rob patients of the potential benefits of this revolutionary technology,” says Adamson, who points to the root of the problem: “The problem isn’t with the algorithm but with the thought and care going into designing and developing the algorithms.”

Methodology

This is the first health article in our algorithmic transparency series. We will soon publish new information on algorithm use in the National Health System. Eva Belmonte and David Cabo collaborated on this investigation, while Ter García, Javier de Vega, Olalla Tuñas and Ana Villota helped review the text.

Civio has confirmed the incorporation of the algorithms and AI systems mentioned in the article through various sources:

1) In the case of the Basque Country, through a public information request via the Transparency Law and three subsequent responses from the press office of the Basque Health Service (Osakidetza), which, at the time of writing, has not responded to our latest questions about Quantus Skin. We expanded our investigation to cover the Transmural Biotech contract, the Basque Country’s catalogue of AI algorithms and systems, the medRxiv preprint by Transmural Biotech employees, which is not peer-reviewed, and the subsequent peer-reviewed article in the International Journal of Environmental Research and Public Health (MDPI) evaluating Quantus Skin, published by dermatologists at the Ramón y Cajal Hospital in Madrid and optics researchers at the Complutense University of Madrid. We also interviewed the Transmural Biotech CEO, who referred to a metric favourable to Quantus Skin, known as negative predictive value (NPV), a parameter that does not answer the same question as sensitivity, but rather gives the probability that a patient with a negative result really does not have the disease. As explained in the article, sensitivity focuses on whether the system will detect all the patients who have melanoma. In other words, these metrics do not contradict each other, but they measure different things. Furthermore, although the company praises the preprint, and publishes data from the preprint on its website, including NPV, the manuscript itself states that “using online images does not constitute a clinical study” and that the findings must be validated in a real population.

2) In the Principality of Asturias, although the regional government initially told us that “there is currently no system in place in the health sector that uses artificial intelligence,” the press office of the Regional Ministry of Health subsequently confirmed the recent launch of several radiology systems, as well as the future inclusion of two dermatology systems.

3) In the Community of Madrid, the Department of Digitalisation initially reported seven AI projects in the Madrid Health Service, a figure that later rose to 70, as we confirmed through an information request. In the text, we only mention some of these systems, which have been implemented in several hospitals in the region.

4) In the Valencian Community, the Regional Ministry of Health initially responded to our request for access by stating that it had not yet decided on incorporating AI systems. We later confirmed, thanks to GVA Confía, Valencia’s Registry of Algorithms, that there are indeed three active systems.
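To illustrate the difference between NPV and sensitivity described in point 1, the following Python sketch computes both from the counts reported in the peer-reviewed study (38 true positives, 17 false negatives, 142 true negatives, 35 false positives). It is an illustration only: the NPV the company cites comes from its preprint and is not reproduced here, and the 1% prevalence used in the second calculation is a hypothetical value chosen for the example.

```python
# Counts from the peer-reviewed Quantus Skin study, as listed in this methodology.
TP, FN = 38, 17    # melanomas flagged / melanomas missed
TN, FP = 142, 35   # benign lesions cleared / benign lesions flagged

sensitivity = TP / (TP + FN)   # of all melanomas, how many are detected
specificity = TN / (TN + FP)   # of all benign lesions, how many are cleared
npv_study = TN / (TN + FN)     # of all negative results, how many are truly negative


def npv(sens: float, spec: float, prevalence: float) -> float:
    """NPV for a given prevalence, derived from sensitivity and specificity."""
    true_negatives = spec * (1 - prevalence)
    false_negatives = (1 - sens) * prevalence
    return true_negatives / (true_negatives + false_negatives)


# ASSUMPTION: 1% prevalence is a hypothetical value, not a figure from the study.
npv_rare = npv(sensitivity, specificity, prevalence=0.01)

print(f"sensitivity:            {sensitivity:.1%}")  # 69.1%
print(f"NPV in the study:       {npv_study:.1%}")    # 89.3%
print(f"NPV at 1% prevalence:   {npv_rare:.1%}")     # 99.6%
```

Because NPV depends on how rare the disease is in the group being tested, it can look reassuring even when the test misses a large share of real cases, which is why it cannot stand in for sensitivity.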

To prepare this report, we searched the scientific literature, mainly through PubMed, Google Scholar and preprint repositories such as medRxiv, bioRxiv and arXiv. We have also consulted health technology assessment reports, such as this document on the application of AI in breast cancer screening, specialised books such as this one published by the CSIC, other specialised documents such as this report by activist Júlia Keserű for the Mozilla Foundation, and we have also attended events such as the conference on AI and medicine organised by the Federation of Spanish Scientific Medical Associations (FACME). We have also consulted the few existing public records on algorithms and artificial intelligence systems, such as those launched in the Basque Country and the Valencian Community.

In addition to the documentary sources above, and the sources quoted in the text, we contacted multiple expert sources whom we would like to thank: lawyers Estrella Gutiérrez David and Guillermo Lazcoz Moratinos, dermatologist Tania Díaz Corpas, and mathematician and statistician Anabel Forte Deltell, who helped us make the first visualisation as clear and accurate as possible.

We also sought evidence on the distribution of different skin types in Spain. A 2020 study in Acta Dermato-Venereologica indicated that the Spanish population is usually classified as phototypes II and III, which we corroborated with various dermatology specialists, who also helped us determine which population groups could be included in the lighter and darker phototypes.

Regarding medical image catalogues in dermatology and the limited annotation of skin tone in photographs, we searched for evidence in the scientific literature and, in addition to a 2022 Lancet Digital Health systematic review, whose data we used for the third visualisation, we also relied on a 2022 Journal of the American Academy of Dermatology systematic review, a 2021 JAMA Dermatology scoping review, and a 2022 Proceedings of the ACM on Human Computer Interaction analysis.

To identify the public contracts awarded to Transmural Biotech S.L. (B65084675), we searched the Public Sector Contracts Platform.

Regarding the first visualisation (sensitivity and specificity of the algorithms):

To create an educational visual representation of the efficacy results of the Quantus Skin algorithm at the end of the article, we approximated the probability distributions of both populations (healthy and diseased) using Gaussian curves.

To do this, based on the actual data from the study (55 cases of melanoma, of which 38 were true positives and 17 were false negatives; 177 healthy cases, of which 142 were true negatives and 35 false positives, with a diagnostic threshold of 67.33%), we mathematically calculated the parameters of two normal distributions that exactly reproduced the observed sensitivity (69.1%) and specificity (80.2%).
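The percentages quoted throughout the article follow directly from these counts. A minimal Python sketch of the arithmetic (the variable and function names are ours, chosen for illustration):

```python
# Counts reported in the peer-reviewed Quantus Skin study.
TP, FN = 38, 17    # melanomas flagged / melanomas missed (55 in total)
TN, FP = 142, 35   # benign lesions cleared / benign lesions flagged (177 in total)


def rate(hits: int, total: int) -> float:
    """Return hits/total as a percentage rounded to one decimal place."""
    return round(100 * hits / total, 1)


sensitivity = rate(TP, TP + FN)           # share of melanomas detected
specificity = rate(TN, TN + FP)           # share of benign lesions cleared
false_negative_rate = rate(FN, TP + FN)   # share of melanomas missed
false_positive_rate = rate(FP, TN + FP)   # share of benign lesions flagged

print(sensitivity, specificity)                  # 69.1 80.2
print(false_negative_rate, false_positive_rate)  # 30.9 19.8
```

The false negative rate of 30.9% is the “one in three melanomas” of the headline, and the false positive rate of 19.8% is the “one in five benign moles” mentioned in the text.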

Results of the Quantus Skin efficacy study

These curves, although simplified, allow us to intuitively visualise how the algorithm distinguishes between the two populations and how the different types of diagnostic hits and errors (false positives and false negatives) respond to different diagnostic thresholds, showing the balance between the two metrics in a simple and visual way.

It is important to note that these graphical representations are approximations for purely informative purposes.
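One way such curves could be constructed is sketched below in Python. This is not the code behind the visualisation: two observed values (sensitivity and specificity) cannot pin down four parameters (two means and two standard deviations), so the sketch assumes, purely for illustration, that both populations share a hypothetical standard deviation of 15 points on the algorithm's 0-100 score.

```python
from statistics import NormalDist

THRESHOLD = 67.33          # diagnostic threshold reported in the study (%)
SENSITIVITY = 38 / 55      # observed: melanomas scoring above the threshold
SPECIFICITY = 142 / 177    # observed: benign lesions scoring below the threshold
SIGMA = 15.0               # ASSUMPTION: hypothetical spread shared by both curves

# Choose each mean so the curve's area on the correct side of the threshold
# matches the observed sensitivity or specificity.
standard = NormalDist()
mu_melanoma = THRESHOLD - SIGMA * standard.inv_cdf(1 - SENSITIVITY)
mu_benign = THRESHOLD - SIGMA * standard.inv_cdf(SPECIFICITY)

melanoma = NormalDist(mu_melanoma, SIGMA)
benign = NormalDist(mu_benign, SIGMA)


def metrics_at(threshold: float) -> tuple[float, float]:
    """Return (sensitivity, specificity) implied by the two curves at a threshold."""
    sens = 1 - melanoma.cdf(threshold)   # melanoma scores above the threshold
    spec = benign.cdf(threshold)         # benign scores below the threshold
    return sens, spec


# Moving the threshold trades one metric against the other.
for t in (50.0, THRESHOLD, 80.0):
    sens, spec = metrics_at(t)
    print(f"threshold {t:5.2f}: sensitivity {sens:.1%}, specificity {spec:.1%}")
```

At the study's threshold the two curves reproduce the observed 69.1% and 80.2% by construction. Lowering the threshold raises sensitivity at the cost of specificity, and raising it does the opposite; the exact values at other thresholds depend on the assumed spread.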

Regarding the second visualisation, about the underrepresentation of dark skin in the largest catalogue of dermatological images:

To design the Fitzpatrick scale (skin tone colour scale) in an educational manner, we used the RGB values from Table 1 published in 2017 in the book Cutaneous melanoma: Etiology and therapy by Codon Publications.

The choice of personalities from the worlds of sport and music as examples has been checked with dermatology specialists. The authors of the photographs used are (CC BY-SA 3.0):

- Ed Sheeran: Harald Krichel
- Taylor Swift: iHeartRadio CA
- Rafael Nadal: Barcex
- Rosario Flores: Fuenlabrada Town Council
- Salma Paralluelo: Michael Emilio
- Nico Williams: Maider Goikoetxea

In the visualisation, each square is equivalent to about 100 images collected in the ISIC.

Regarding the third visualisation, on the limited coding of skin tone in most medical databases:

Each group consists of 10,695 circles, each representing ten dermatological images included in a Lancet Digital Health systematic review. The percentages used in the visualisations can be found in the summary of the publication. From the different clinical information collected in the systematic review, we chose the three that we thought were most relevant and best suited to the research.

The three visualisations were developed with D3.js and Svelte.js.